🌲 Prompt engineering gave me empathy for my bosses

How feedback from superiors, prompt engineering, and giving tasks to teenaged helpers helped me refine my communication style.

One of the unexpected benefits of practicing talking to computers has been getting better at communicating with humans, too.

Throughout my professional life, a consistent piece of feedback from various bosses has been that I have a tendency to bury the lede. It's a habit I picked up in school, I think: teachers ingrained the need for long introductions before getting to the thesis statement. It's also a bit of defensive judo that comes from a sense of imposter syndrome, where I put all of my justifications for my opinions at the beginning instead of just making my claim. Then of course there’s a certain element of prioritizing fast over well crafted, because I'm in a hurry to get my thoughts down and don't always take the time to go back and edit.

Knowing your flaws — and the reasons behind them — is only half the battle to fixing them, though, or honestly maybe just a third. First comes knowledge, then comes a ham-fisted series of attempts to improve (often hampered by forgetfulness), then (slowly) comes enough understanding of the problem to leverage metacognition for real improvement.

Now, thanks to some recent experiments with prompt engineering for Readwise’s Ghostreader[1], I think I have a better understanding of why I was getting that feedback.

LLMs have a lot in common with students

A particularly grating aspect of current large language models, including the ones I frequently work with, is their habit of restating questions before providing answers. I assume their training data contains a lot of stuff common to the classroom environment — where students rephrase the question to ensure clarity and context, then answer it.

Students of course do this because their teachers have demanded it. There’s an impulse to say that this is because schools are — but truthfully, we do this because we’re training people for the real world. It’s the same with outlines; most essays written in school are not complex enough to really need one, but teachers try to force what feels like training wheels anyway, because one day you may find yourself writing a hundred-page dissertation or a 4,000 word product update and having an idea of how to outline is super helpful for that.

Similarly, adding context to the beginning of a sentence or paragraph is often useful — tweets, for example, get decontextualized from the original question pretty easily, so “just” answering a question isn’t always a great idea. This isn’t really a problem on school assignments. Teachers generally know what the assigned question was — when I used to spend an evening reading a hundred student essays all on the same topic, the restatements all blurred together into an indistinguishable mass. Confirming mastery of the skill was important, but the interesting part of the essay was always the claims and, crucially, the evidence and justification for those claims.

When I realized this, I added the following line to my favorite prompt:

Write no more than ONE thesis statement, like one would find at the end of an AP history test’s introductory paragraph.

We want different things from LLMs and students

When I’m reading a summary written by a large language model, though, I am “grading” it on different criteria than I would use for a sixth grader or an AP World History student. An LLM is not training to be a jack of all trades or getting a PhD in history; LLMs serve, at their best, as rubber duckies and as fast but unskilled secretaries with a great attitude and godawful comprehension skills.

As with what I imagine managing such a secretary might be like, I found that the best way to prompt engineer AI and large language models is to address pain points very specifically, re-emphasize important directions, and make sure to signpost[2] the critical details.

My first, most common pain point? As with student essays, the introduction is often pointless fluff. So my favorite prompt engineering hack lately has been to emphasize to the large language model that it should skip restating the question, provide no context, and avoid saying things like “the document says” or “the author claims” — and just make three bold claims.
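For the curious, here is a minimal sketch of how those directions could be assembled into a reusable prompt. The helper name `build_prompt`, the `RULES` tuple, and the exact wording of each rule are hypothetical illustrations; this is not my actual Ghostreader prompt, and it does not call any real API.

```python
from typing import Sequence

# Hypothetical rules paraphrasing the directions described above.
RULES = (
    "Do not restate the question.",
    "Do not provide background context.",
    'Do not use phrases like "the document says" or "the author claims".',
    "Make exactly three bold claims.",
)


def build_prompt(document_text: str, rules: Sequence[str] = RULES) -> str:
    """Build a summarization prompt: numbered rules, the text, then a reminder."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    # Re-emphasize the most important direction by repeating it at the very end.
    reminder = f"Remember: {rules[-1]}"
    return (
        "Summarize the text below.\n\n"
        f"Rules:\n{numbered}\n\n"
        f"Text:\n{document_text}\n\n"
        f"{reminder}"
    )


if __name__ == "__main__":
    print(build_prompt("Paste the article text here."))
```

Keeping each direction as its own numbered rule makes it easy to add a new one every time a fresh annoyance shows up, and repeating the last rule at the end is one simple way to re-emphasize it.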

LLMs can be succinct if you force them

I don’t know anything about the training data that goes into large language models like ChatGPT, but I know the internet, and I know it’s got a ton of guides on things like writing college-level essays and writing loglines for pitching novels — a simple prompt like “write a logline for Silence of the Lambs” returns a pretty credible succinct summary that is way better than a general request for a summary of the movie.

A young FBI trainee must enlist the help of an imprisoned and manipulative cannibalistic serial killer to catch another serial killer who is still at large and claiming female victims.

As with many things in life, specificity (here, of the type of summary I want) helps a lot.

The upshot of this for me personally is that I've become a lot more aware of what it feels like to pay careful attention to conversational patterns and make suggestions to optimize them. Basic self-awareness has made me more cognizant of my own similar foibles, and now that I'm paying more attention to things that annoy me, I’m better able to empathize with other people I might be annoying. It’s pretty similar to how I’ve deliberately modeled my social media and blogging habits after my preferences as a reader, except ChatGPT is the first opportunity I’ve ever had to really put myself into managerial shoes.

ChatGPT has made me a lot more aware of why my conversational foibles can be frustrating — whereas just trying to take feedback from a boss is a lot harder. I’m conscientious enough that I’ve always tried to apply direct feedback, and I’ve followed specific instructions I’ve been given with fidelity, of course.

Putting the bottom line up front isn’t enough

I recently learned that the military has an acronym for this, BLUF, but it wasn’t until I heard the phrase “put the most important details up front” that it really clicked for me; I thought a clear subject heading was enough.

It’s not!

One of my favorite old bosses specifically asked for bullet point summaries at the top of otherwise comprehensive and useful emails. I was in the habit of writing down everything I remembered about the context leading up to students getting sent out of class, which was critical for, say, suspension paperwork. I was good at providing a useful subject header — but I wasn’t doing a good enough job of providing a middle-ground “tl;dr” he could skim when he didn’t have time to read the whole email before dealing with the student… mostly because I read very fast; when I was younger, it was hard to come to terms with the idea that someone making four times my salary read at a quarter of my pace.

So until now I was prone to applying that sort of feedback in what now feels like a relatively rote way — by following direction very explicitly, and maybe generalizing a lesson out toward other forms of communication. My boss’ comment made me understand the value of signposting and thesis statements in the introduction and conclusions, which until then mystified me because I always read everything and don’t have much trouble retaining what I’ve read.

Part of growing up is understanding all the ways in which other people aren’t like you. The typical mind fallacy is a pernicious trap.

Empathy is difficult to master but important to practice

Part of being a parent with a full-time job is abruptly having a lot less time and mental bandwidth than I used to; for the first time in my life, I’m finally operating from a place where I really get time and attention constraints. I still prefer themed logs over daily notes, but when I fire up my read-it-later app and find myself confronted with 40 articles I really did mean to read, I am a lot more frustrated when the 3 to 6 words in the teaser box are useless. I don’t open articles with titles like “Blog Title: Edition 32” anymore. If all I see is “the intent of this document is to discuss” in the summary metadata, I tend to move on.

In a similar vein, if I'm pinging a busy colleague with a message, I want the message itself to be well crafted and comprehensive, but I’ve become a lot more cognizant of how those first six words might be the only thing that shows up in the notification panel on their phone, so I need to make sure that those words are a succinct and accurate overview in their own right.
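A quick way to sanity-check a message is to look at only its first six words. The sketch below is a rough illustration: the `preview` function is hypothetical, and the six-word cutoff is simply the figure I use above. Real notification panels usually truncate by characters or screen width, not by word count.

```python
def preview(message: str, max_words: int = 6) -> str:
    """Return the first max_words words of a message, joined by single spaces."""
    words = message.split()
    return " ".join(words[:max_words])


# Two hypothetical messages making the same request.
buried = "Hi! Hope you're doing well. Quick question about the report deadline."
upfront = "Report deadline: can we move to Friday?"

if __name__ == "__main__":
    print(preview(buried))   # Hi! Hope you're doing well. Quick
    print(preview(upfront))  # Report deadline: can we move to
```

The first preview tells the reader nothing; the second at least names the topic and the ask.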

LLMs don’t default to anything resembling ‘succinct and accurate,’ but, as with a conscientious teenager[3] helping out in an unfamiliar kitchen for the first time, you can get them to do what you want if you refine your directions enough times. And in the process, maybe learn a little something about how a manager might feel when trying to get you to communicate in their preferred style.

LLMs can help build communication skills

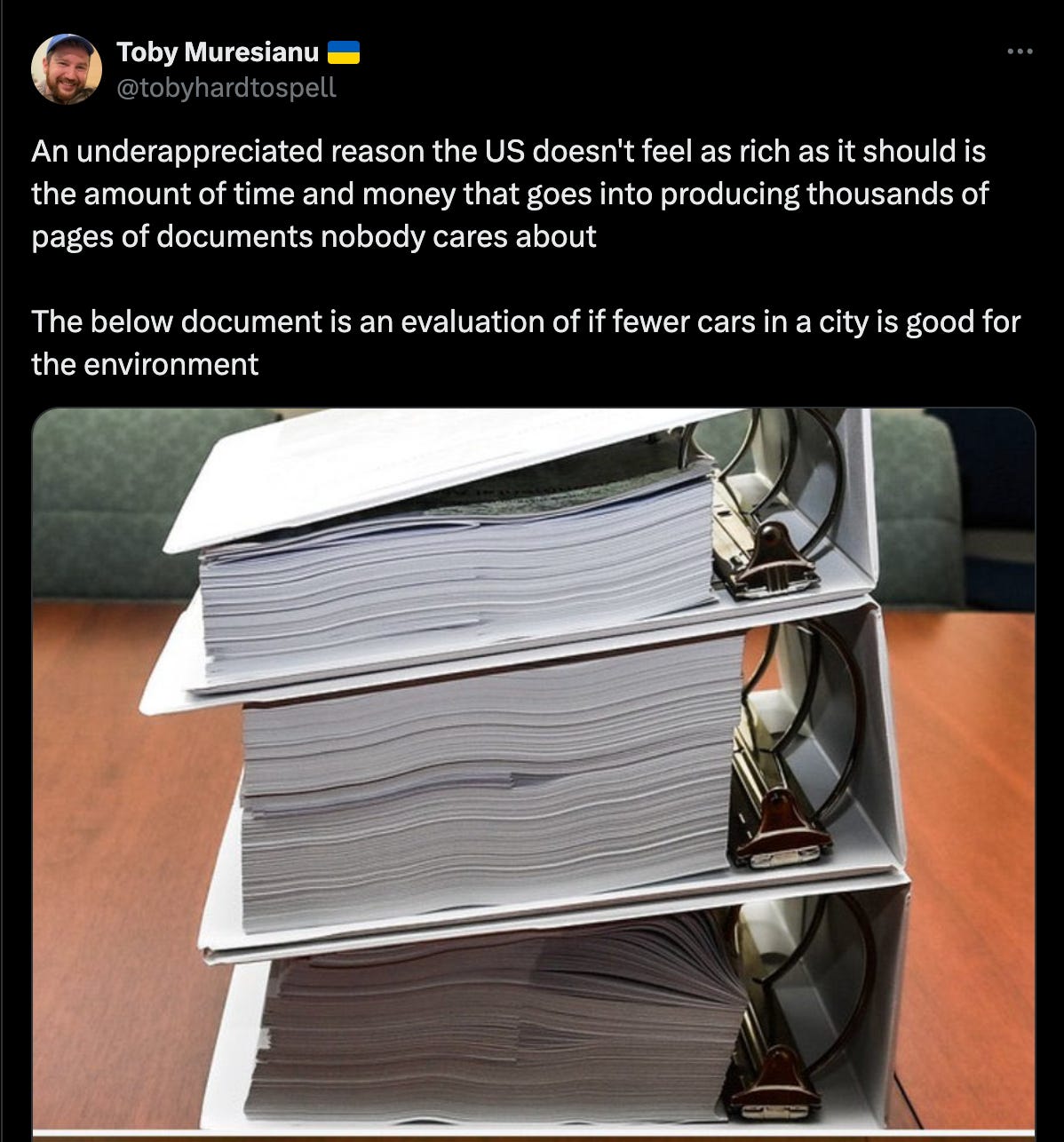

Reflecting on how LLMs have helped my professional growth has made me genuinely wonder if they are going to make people more aware of how they communicate and improve communication — or if, as predicted by

it's just going to generate a bunch of stuff[4] that nobody writes and nobody reads.

Because despite all the AI doomerism in my social sphere, this is just one small way that the increasing prevalence of large language models is improving my productivity. I think a lot of people in my ‘bubble’ focus a lot on getting large language models to “create” art or at least products (book covers, nonfiction articles, emails that are annoying to write a polite version of), but the most useful things I've done with LLMs are with things that are more me-facing; stuff like translating content into different formats. You can check out my personal take on using LLMs for more about how I use AI for research, summarization, motivation, reformatting, & emergency stories for my kids.

My real question for now, though, is whether the rise of LLMs will ultimately lead to more bureaucratic cruft (😭), or more targeted & blunt communication. We’ve got to be able to do better than this, right?

[1] I work for Readwise now, but my newsletter predates the job and my role there is quality assurance, not marketing. It’s not a terribly glamorous job, but it’s satisfying. If you want to join the team, we’re currently hiring a principal product designer.

[3] After extensive testing, I’ve determined that my favorite childcare comes from college freshmen; they’ve generally got very open schedules, but aren’t looking to work full time, which works great for my weird schedule. Plus, if the baby is napping during one of my regular meetings, they don’t bat an eye at helping with meal prep or assembling IKEA furniture for me during downtime. They also like to learn, and are less emotionally awkward for me to teach.

[4] Resumes, specifically. Click thru for the classic Spider-Man meme about AI generated/reviewed applications, as well as a compelling prediction about the future of tech-related job markets.
